Day 4: My Job Scraper Plan Keeps Changing (And That’s Okay)

Auto-Discovery, LLMs, Microservices, and More

Day 4 done.

No code today, just research.

Honestly, it felt like I did less work than on previous days, but research is work too.

Here’s what happened:


The Research Rabbit Hole

Started with: “What’s already out there?”

- Found Apify (a scraping platform), but it isn’t tailored to my use case

- Asked AI if I could build my own → “Yes, but…”

Key pivot: Skip job aggregators (LinkedIn, Indeed). Go straight to company career pages.

Why?

- Fresher data – Direct from source, no stale listings

- Lower legal risk – Career pages are public; aggregators have stricter ToS

- More reliable – No middleman filtering/changes

The Auto-Discovery Dream (That Died)

I wanted my crawler to discover new companies automatically after I seeded it with 500. Reality check:

- Each company’s career page has unique HTML structure

- Writing 500 custom scrapers = impossible

- A single HTML change breaks that site’s scraper

Lesson learned: Start simple. Manual list of 500 companies → build a general scraper → worry about discovery later.
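One way a single general scraper could cope with hundreds of different layouts, at least on some sites, is to ignore the visual HTML and read the schema.org `JobPosting` structured data that some career pages embed as JSON-LD for search engines. This is a swapped-in illustration under my own assumptions, not a settled design: not every career page includes it, and the fields I pull (`title`, `datePosted`, `jobLocation`) are just a starting point.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buf = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buf))
            self._in_jsonld = False


def extract_job_postings(html: str) -> list:
    """Return the JobPosting objects found in the page's JSON-LD (may be empty)."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    postings = []
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed block: skip it instead of crashing the crawl
        # A block may hold a single object, a list, or an "@graph" of objects.
        if isinstance(data, list):
            items = data
        elif isinstance(data, dict):
            items = data.get("@graph", [data])
        else:
            continue
        for item in items:
            # Simplification: only handles "@type" given as a plain string.
            if isinstance(item, dict) and item.get("@type") == "JobPosting":
                postings.append({
                    "title": item.get("title"),
                    "date_posted": item.get("datePosted"),
                    "location": item.get("jobLocation"),
                })
    return postings


page = ('<script type="application/ld+json">'
        '{"@type": "JobPosting", "title": "Backend Engineer", "datePosted": "2025-01-10"}'
        '</script>')
print(extract_job_postings(page))
# [{'title': 'Backend Engineer', 'date_posted': '2025-01-10', 'location': None}]
```

Pages without JSON-LD simply return an empty list, which is a useful signal for which companies would need a fallback (custom selectors or the LLM route below).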

LLM Scraping: Cost Nightmare

Thought: “Use LLM vision to parse any career page automatically!”

Reality:

- OpenAI GPT-4V costs ₹10-20 per page

- 500 companies = ₹5,000-10,000 just for testing

- Free quotas run out before I even finish 50 companies
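The cost math above is just pages × price per page. A tiny helper makes it obvious how quickly re-runs blow up the budget. The ₹10–20 range is my rough per-page estimate from above; `pages_per_company` and `runs` are hypothetical knobs, since real career sites often span several pages and testing means running the same pages more than once.

```python
def llm_cost_range(companies, pages_per_company=1, runs=1, inr_per_page=(10, 20)):
    """Return (low, high) estimated cost in rupees for LLM-parsing career pages."""
    pages = companies * pages_per_company * runs
    low, high = inr_per_page
    return pages * low, pages * high


print(llm_cost_range(500))          # (5000, 10000)
print(llm_cost_range(500, runs=3))  # (15000, 30000)
```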

New plan: run a self-hosted open-source LLM (haven’t picked a model yet) locally first. Generate a base dataset of 500 companies on localhost, then deploy a lean version.
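Whatever model I pick, a local LLM will be slow with huge prompts, so stripping each page down to its visible text first seems worthwhile. A minimal sketch, assuming the model takes plain text: the skipped tags, the `max_chars` budget (a crude stand-in for a token limit), and the prompt wording are all my own hypothetical choices, and the actual model call is left out because it depends on the model and runtime.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Keeps human-visible text and drops scripts, styles and similar noise."""

    SKIP = {"script", "style", "noscript", "svg", "head"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html, max_chars=8000):
    """Flatten HTML to visible text, truncated to a rough prompt budget."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)[:max_chars]


def build_extraction_prompt(page_text):
    """Hypothetical prompt asking the local model for structured job data."""
    return ("Extract every job opening from the career page text below. "
            "Reply with a JSON list of objects with keys: title, location, url.\n\n"
            + page_text)


html = ("<html><head><style>p{}</style></head><body><h1>Careers</h1>"
        "<script>track()</script><p>Backend Engineer</p></body></html>")
print(html_to_text(html))  # Careers Backend Engineer
```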

Microservices Panic (Amazon Prime Video Article)

Saw headline: “Amazon Prime Video ditches microservices for monolith.”

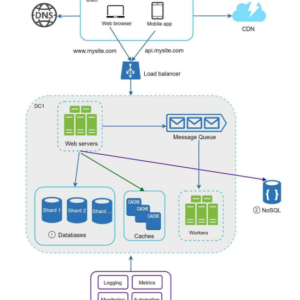

Panicked hard. Does this mean my 4-service plan is wrong?

(It was almost two-year-old news! I guess it showed up now because I’ve been searching for system design a lot lately. This article made it pretty clear: they moved only what wasn’t a good fit for microservices, not everything.)

Reality check: They moved video processing (huge files, massive data transfer costs) into a monolith. My job listings are text data, so standard microservices still make sense for me.

Final Plan (For Now)

Phase 1 (Local, Days 6-15):

- Build a 500-company list (already started, need expert guidance)

- Pick/deploy open-source LLM locally

- Build scraper for 10 companies → validate → scale to 50 → 500

- Design a database schema based on real scraped data

- Generate “base dataset” for production
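The real schema should come from real scraped data, but a placeholder helps me think about the one thing I know I need: re-scraping the same listing daily must update it, not duplicate it. A minimal sketch using SQLite, assuming a listing's URL is a stable unique key (an assumption I still have to verify per site); the table and column names are hypothetical. The `ON CONFLICT ... DO UPDATE` upsert needs SQLite 3.24 or newer.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS companies (
    id          INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    careers_url TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS jobs (
    id          INTEGER PRIMARY KEY,
    company_id  INTEGER NOT NULL REFERENCES companies(id),
    title       TEXT NOT NULL,
    location    TEXT,
    url         TEXT NOT NULL UNIQUE,  -- one row per listing; re-scrapes update it
    first_seen  TEXT NOT NULL,
    last_seen   TEXT NOT NULL
);
"""


def upsert_job(conn, company_id, title, location, url, seen_at):
    """Insert a new listing, or refresh an existing one while keeping first_seen."""
    conn.execute(
        """
        INSERT INTO jobs (company_id, title, location, url, first_seen, last_seen)
        VALUES (?, ?, ?, ?, ?, ?)
        ON CONFLICT(url) DO UPDATE SET
            title = excluded.title,
            location = excluded.location,
            last_seen = excluded.last_seen
        """,
        (company_id, title, location, url, seen_at, seen_at),
    )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO companies (name, careers_url) VALUES (?, ?)",
             ("ExampleCo", "https://example.com/careers"))
# The same listing scraped on two different days.
upsert_job(conn, 1, "Backend Engineer", "Remote", "https://example.com/careers/123", "2025-01-10")
upsert_job(conn, 1, "Backend Engineer", "Remote", "https://example.com/careers/123", "2025-01-11")
print(conn.execute("SELECT COUNT(*), MIN(first_seen), MAX(last_seen) FROM jobs").fetchone())
# (1, '2025-01-10', '2025-01-11')
```

Keeping `last_seen` also gives a cheap way to spot listings that have disappeared from a career page, which ties into the “fresher data” goal.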

Phase 2 (Deployed, Day 20):

- Deploy lean crawler (no LLM vision)

- Live job aggregator service

Two Big Doubts Before Coding

- Legal compliance – Can I scrape career pages? What’s fair use?

- robots.txt – How strictly must I follow it? What if companies block me?
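At least the mechanical part of the robots.txt question is easy: Python’s standard library ships `urllib.robotparser`. A minimal sketch that parses an inline sample instead of fetching one (a real crawler would call `set_url()` and `read()`); the `HuntKitBot` user-agent and the sample rules are hypothetical. Note that `Crawl-delay` is a widely used extension rather than part of RFC 9309, so not every site sets it.

```python
from urllib import robotparser

# Hypothetical robots.txt for an example career site.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /careers/internal/
Crawl-delay: 5
"""


def make_parser(robots_txt):
    """Build a RobotFileParser from robots.txt text already in memory."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


UA = "HuntKitBot"  # hypothetical user-agent name for my crawler
rp = make_parser(SAMPLE_ROBOTS)
print(rp.can_fetch(UA, "https://example.com/careers/"))            # True
print(rp.can_fetch(UA, "https://example.com/careers/internal/x"))  # False
print(rp.crawl_delay(UA))                                          # 5
```

This only tells me what a site asks crawlers to avoid; robots.txt is a crawler convention, not a legal permission, so it doesn’t settle the first doubt.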

Tomorrow (Day 5)

- Deep dive on legalities + robots.txt

- Finish 500 companies list (need variety: startups, product companies, MNCs)

- Pick the LLM

Day 6: Finally, code something. (Can’t wait to see how awesome it will be this time!!)

What I learned today: Building a project is different from building a product that real users are supposed to use. You hit plenty of pivots and end up with thousands of questions. If you had asked me a week ago to build the entire HuntKit, I would have said seven days, tops. But then, who would have used it? Maybe not even me, at least not every day.

Honest question: Have you built production scrapers? How did you handle legal risks?