Hello Pythonistas 🙋‍♀️, welcome back.

Today we are going to discuss the requests and BeautifulSoup 🍲 modules in Python.

We will see how you can extract a web page’s HTML content with the requests module and then parse that content using BeautifulSoup.

We will understand this by comparing the prices 💵 of two books 📗📘 on an e-commerce 🛍️ website.

We will focus mainly on understanding how to work with these modules, not on the comparison logic.


Solution to the previous post’s challenge

Here’s the solution 🧪 to the challenge provided in the last post:

```python
import re


def URLExtracter(text: str) -> list[str]:
    """This function takes a text string and returns a list of URL(s).

    It is a basic implementation.
    It doesn't work in advanced scenarios.
    It uses regular expressions to extract URL(s).

    Args:
        text: (str)

    Returns:
        list of URL(s): list(str)
    """
    urls = []
    url_pattern = r'https?://www\.[A-Za-z0-9_-]+\.[a-zA-Z]+'
    urls_iter = re.finditer(url_pattern, text)

    for i in urls_iter:
        urls.append(text[i.span()[0]:i.span()[1]])

    # urls = [m.group() for m in urls_iter] can be done this way too

    return urls


text = """This is a sample text with URLs. Here are a few URLs you can extract:
- http://www.example.com
- https://go0gle.com
- http://www.subdomain.example.com
- http://www.another-example-site.net/path/page.html
- https://www.testsite.org
- www.invalidurl (invalid URL without protocol)"""

print(URLExtracter(text))
```

Please read the comments in the code to understand it clearly. 👍 If you still have any doubts, ask them in the comment section below. 👇
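The comment inside the function mentions a list-comprehension alternative. Here is a minimal sketch of that variant (renamed `extract_urls`, a hypothetical name), run on a few of the sample lines so you can see what “basic implementation” means in practice: `https://go0gle.com` is skipped because it has no `www.`, and the subdomain URL is cut off after its first dot-separated suffix.

```python
import re

# Same pattern as in URLExtracter above
URL_PATTERN = r'https?://www\.[A-Za-z0-9_-]+\.[a-zA-Z]+'


def extract_urls(text: str) -> list[str]:
    """Return every substring of `text` that matches URL_PATTERN."""
    # m.group() returns the matched text, same as slicing with m.span()
    return [m.group() for m in re.finditer(URL_PATTERN, text)]


sample = """- http://www.example.com
- https://go0gle.com
- http://www.subdomain.example.com"""

print(extract_urls(sample))
# ['http://www.example.com', 'http://www.subdomain.example']
```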

What is the requests module?

So, have you ever used a website🌐?

If so, you have either typed ⌨️ something like https://www.wikipedia.org/ into your browser’s address bar, or clicked 🖱️ a website that came up in your search 🔍 results.

In both cases, you are requesting a website’s server 🗄️ to give you something it has (an image 🌅, a video, text, a link, anything).

In return, you (usually) get a web page. Here, the website’s server 🗄️ has responded to you.

The requests module in Python helps you deal 🤝 with these HTTP requests and responses.

Note: with this module, you can both send requests and receive responses.

How? With a lot of methods. Two widely used ones are:

- get()

- post()

get()

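The get() method helps you fetch the HTML </> of a web page 📃. When you send a GET request to a web page, you get its source code as the response: only the raw HTML, since the images and scripts the page references are not downloaded and JavaScript is not run.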

Let’s see 👀 it in practice. We will use https://httpbin.org/, a popular website for practicing HTTP requests.

First, install the module and then import it:

```bash
pip install requests
```

```python
import requests
```

Now, pass the URL to the get() method:

```python
import requests

responses = requests.get("https://httpbin.org/")

print(responses.status_code)
```

Here, the status code tells you whether the page was accessed successfully 👍 or not. If the output is 200, you did it ✅. If not, check your internet 🌐 connection or your code.
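A status code is a standard HTTP number, so you don’t have to memorise them: Python’s built-in `http.HTTPStatus` enum can translate one into its name. A small sketch (the helper `describe_status` is just an illustrative name, not part of requests):

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Turn an HTTP status code into a short human-readable description."""
    phrase = HTTPStatus(code).phrase  # e.g. 200 -> "OK", 404 -> "Not Found"
    # Codes in the 200-299 range mean the request succeeded
    outcome = "success" if 200 <= code < 300 else "not a success"
    return f"{code} {phrase} ({outcome})"


print(describe_status(200))  # 200 OK (success)
print(describe_status(404))  # 404 Not Found (not a success)
```

With requests itself, `responses.ok` is `True` for any status code below 400 (slightly more lenient than this helper), and `responses.raise_for_status()` raises an `HTTPError` for 4xx and 5xx codes.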

But how do we get the HTML code </> of this page?

```python
import requests

responses = requests.get("https://httpbin.org/")

print(responses.text)
```

Where will we use this? You’ll find out in the case study later in this post, where we apply this module.

Note: How fast this module works depends on your internet speed (and on how quickly the server responds).
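Because a slow connection (or a slow server) can make a request hang, `requests.get()` accepts a `timeout` argument in seconds, and network problems are raised as subclasses of `requests.exceptions.RequestException`. Here is a hedged sketch of a small wrapper; the name `fetch_html` and the 5-second default are illustrative choices, not something requests requires:

```python
from typing import Optional

import requests


def fetch_html(url: str, timeout: float = 5.0) -> Optional[str]:
    """Return the page's HTML, or None if the request fails or times out."""
    try:
        # timeout is in seconds; without it, requests may wait indefinitely
        response = requests.get(url, timeout=timeout)
        response.raise_for_status()  # treat 4xx/5xx status codes as failures
    except requests.exceptions.RequestException as error:
        # Covers timeouts, connection errors, invalid URLs and HTTP errors
        print(f"Request failed: {error}")
        return None
    return response.text
```

For example, `fetch_html("https://httpbin.org/")` returns the same string as `responses.text`, or `None` if anything goes wrong, so you can check for `None` before parsing.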

post()

The post() 📬 method helps you send a POST request to a web page’s server 🗄️. This means you want to send ➕ some data to the server, for example when submitting a form.

For this, we need a page that accepts 👍✅ such requests: https://httpbin.org/post

We provided only the URL to the get() method. For the post() method, we also need to provide the data to be sent along with the URL (usually a dictionary or JSON).

```python
responses = requests.post('https://httpbin.org/post', data={'name': 'age'})
```

To see if you succeeded, just print out the responses variable:

```python
print(responses)
```

It should show something like `<Response [200]>`. Now, to see what the response actually contains:

```python
print(responses.json())
```

You’ll see 👀 the key:value pair you sent in the dictionary, echoed back by httpbin under the "form" key. 👍
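If you’re curious what post() actually sends, requests lets you build a request without sending it, via `requests.Request(...).prepare()`. This offline sketch compares the `data=` argument used above (form-encoded fields) with the `json=` argument (a JSON body), using the same dictionary:

```python
import json

import requests

URL = "https://httpbin.org/post"
payload = {"name": "age"}

# data= sends the dictionary as form fields; nothing goes over the network here
form_req = requests.Request("POST", URL, data=payload).prepare()
print(form_req.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form_req.body)                     # name=age

# json= serialises the dictionary to a JSON body instead
json_req = requests.Request("POST", URL, json=payload).prepare()
print(json_req.headers["Content-Type"])  # application/json
print(json.loads(json_req.body))         # {'name': 'age'}
```

httpbin reflects this difference too: form data comes back under "form", while a JSON body comes back under "json".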

What is the BeautifulSoup 🍲 module?

You got the HTML code in the form of a string. But isn’t it difficult 😕 to read, understand, and access? Dealing 🤝 with it by hand seems like a tough, manual task.

Don’t worry, as always there is a Pythonic ✅ way to do it with a module called BeautifulSoup 🍲.

BeautifulSoup is a module used to parse 🔍 HTML or XML. It can help even when the document is malformed ☠️ (for example, when it is missing closing tags).
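Here’s a tiny offline illustration of that tolerance, assuming beautifulsoup4 is already installed (installation is covered in the next section). The HTML string is made up for the example, and its `<b>` tag is never closed:

```python
from bs4 import BeautifulSoup

# Hypothetical malformed HTML: the <b> tag has no closing </b>
broken_html = "<p>Hello <b>world</p>"

soup = BeautifulSoup(broken_html, "html.parser")

# The <b> tag is closed for us when </p> arrives
print(soup)               # <p>Hello <b>world</b></p>
print(soup.b.text)        # world
print(soup.p.get_text())  # Hello world
```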

Let’s first see how you can create a soup using this module to parse through the data.

Creating a soup

Aren’t you wondering what this “soup” is? It certainly isn’t a soup with veggies.

Well, it is the parsed representation of an HTML or an XML document.

In simple words, it is a structured and organized version of an HTML or XML document after it has been processed and analyzed by the parsing ⚙️ engine.

Using the soup 🍲, we can find the content of specific tags and also get a better-looking 👌 version of the HTML string.

To create a soup, first install beautifulsoup4:

```bash
pip install beautifulsoup4
```

We just need to import the BeautifulSoup 🍲 class from this:

```python
from bs4 import BeautifulSoup
```

Now, we are ready to make (cook) 😋 our soup:

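```python
import requests
from bs4 import BeautifulSoup

response = requests.get("https://httpbin.org/")

# Parse the page's HTML with Python's built-in HTML parser
soup = BeautifulSoup(response.text, 'html.parser')
```

Here, the first ☝️ argument is the HTML content, and the second ✌️ argument specifies the parser to be used. In this case, we’re using 'html.parser', the HTML parser that ships with Python’s standard library, so nothing extra needs to be installed.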

That’s it! You now have a soup😋🍲 object named soup that represents the parsed HTML content.
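To experiment without a network connection, you can also cook a soup from any HTML string. This sketch uses a small made-up page (the title and links are hypothetical) to show a few ways of moving around the soup: accessing a tag as an attribute, reading its text, and collecting every link’s `href` with `find_all()`:

```python
from bs4 import BeautifulSoup

# A small made-up page, used instead of a downloaded one
html = """
<html>
  <head><title>My Bookshop</title></head>
  <body>
    <a href="/book-1">Book 1</a>
    <a href="/book-2">Book 2</a>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

print(soup.title.string)  # My Bookshop

# find_all() returns a list of every matching tag
links = soup.find_all("a")
print([link["href"] for link in links])  # ['/book-1', '/book-2']
print([link.text for link in links])     # ['Book 1', 'Book 2']
```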

Working on the soup

Now, let’s try to print this HTML content in a formatted manner:

```python
print(soup.prettify())
```

The prettify() method formats the HTML structure and makes it more 🐱‍👓 readable.

It adds indentation and line breaks to the HTML code, improving its visual representation.

It is particularly helpful when you want to inspect 🔍 the structure of the parsed HTML content.

Let’s try to find an h2 tag in this document that has the class title. We will use the find() method for this purpose:

```python
h2_title = soup.find("h2", {"class": "title"})

print(h2_title)
```

Here, "h2" is the tag name, and the dictionary narrows the search to tags with the given class. You can also target tags with a particular id this way. 👍 If no tag matches, find() returns None.
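The same search can be written in a few other ways. A sketch on a made-up snippet (the HTML and the `intro` id are hypothetical): `class_=` is BeautifulSoup’s keyword for the class attribute (the trailing underscore avoids Python’s `class` keyword), `id=` works the same way, `find_all()` returns every match, and `find()` returns `None` when nothing matches, so it’s worth checking before using `.text`.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet for illustration
html = """
<h2 class="title">First title</h2>
<h2 class="title">Second title</h2>
<p id="intro">Welcome!</p>
"""

soup = BeautifulSoup(html, "html.parser")

# These two searches are equivalent
a = soup.find("h2", {"class": "title"})
b = soup.find("h2", class_="title")
print(a is b)  # True: both return the same first <h2>

# Finding a tag by its id
print(soup.find(id="intro").text)  # Welcome!

# find_all() returns every match instead of only the first
print([h2.text for h2 in soup.find_all("h2", class_="title")])

# find() returns None when no tag matches
missing = soup.find("h3")
print(missing)  # None
```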

To access the text enclosed </> in the h2_title tag, we would write ✍️:

```python
print(h2_title.text)
```

Now that we have gained a basic understanding of these two modules, let’s put them into practice for better and faster 💨 understanding.

Comparing 📊 two books on an e-commerce website

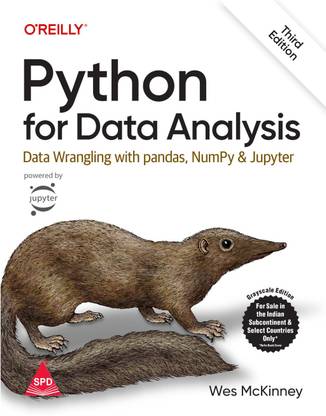

These are the two ✌️ books we will be comparing (we will compare their prices 💵).

I’ll take their information from Flipkart.

Let’s first start by extracting their HTML content with the requests module.

```python
r = requests.get('https://www.flipkart.com/python-data-analysis-wrangling-pandas-numpy-jupyter-third-grayscale-indian-edition/p/itmc018385d4765d')

r2 = requests.get('https://www.flipkart.com/big-data-analysis-python/p/itmfex3ddpgejnyy')
```

Now, let’s parse these pages’ content.

```python
# r and r2 are the responses fetched above
soup1 = BeautifulSoup(r.text, 'html.parser')

soup2 = BeautifulSoup(r2.text, 'html.parser')
```

Now, let’s find the price 💵 of these books 📚. To do this, we need to inspect 🔎 the page and see which tag holds the price information.

A div tag with classes _30jeq3 and _16Jk6d has the information about their prices.

```python
b1Price = soup1.find("div", {"class": "_30jeq3 _16Jk6d"})

b2Price = soup2.find("div", {"class": "_30jeq3 _16Jk6d"})
```

Note: in HTML, multiple classes are separated by spaces.
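One subtlety with multiple classes: when you pass a string containing spaces, BeautifulSoup matches the class attribute’s exact value, so the classes must appear in the same order as in the page. A CSS selector via `select_one()` doesn’t care about the order. In this sketch, the snippet and its price are made up; only the class names come from the article:

```python
from bs4 import BeautifulSoup

# Hypothetical snippet reusing the class names from the article
html = '<div class="_30jeq3 _16Jk6d">₹999</div>'
soup = BeautifulSoup(html, "html.parser")

# Exact string match: the same order works, a swapped order does not
print(soup.find("div", {"class": "_30jeq3 _16Jk6d"}))  # the <div> tag
print(soup.find("div", {"class": "_16Jk6d _30jeq3"}))  # None

# A CSS selector matches both classes in any order
print(soup.select_one("div._16Jk6d._30jeq3").text)  # ₹999
```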

We need to convert 💱 these prices 💵 to integers to compare them.

For that, we need to remove the first character (the currency symbol) and the character at index 2, which is a comma.

```python
# Drop index 0 (currency symbol) and index 2 (the comma), then convert
p1 = int(b1Price.text[1:2] + b1Price.text[3:])

p2 = int(b2Price.text[1:2] + b2Price.text[3:])
```

Now, let’s compare:

```python
if p1 > p2:
    print("Book 1 is costlier")
elif p1 < p2:
    print("Book 2 is costlier")
else:
    print("Both have the same price")
```

When you run this code </>, you’ll see the output:

```text
Book 2 is costlier
```

That’s it for the comparison!
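One caveat about the price conversion: the slicing `text[1:2] + text[3:]` assumes the price looks like `₹1,299`, with exactly one digit before the comma. A price such as `₹499` would become 49, and `₹12,999` would raise a `ValueError`. A more general sketch (the helper name `parse_price` is hypothetical) keeps only the digit characters, assuming whole-number prices:

```python
def parse_price(price_text: str) -> int:
    """Convert a price string such as '₹1,299' to an integer (1299)."""
    # Keep only decimal digits, dropping the currency symbol and commas
    digits = "".join(ch for ch in price_text if ch.isdecimal())
    if not digits:
        raise ValueError(f"No digits found in {price_text!r}")
    return int(digits)


for sample in ["₹1,299", "₹499", "₹12,999"]:
    print(sample, "->", parse_price(sample))
# ₹1,299 -> 1299
# ₹499 -> 499
# ₹12,999 -> 12999
```

With it, the conversion becomes `p1 = parse_price(b1Price.text)` and `p2 = parse_price(b2Price.text)`. Note that a decimal point would be dropped as well, so prices with paise would need extra handling.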

Conclusion

Remember, this is just an introduction to these powerful modules. We can dive deeper into them if you want.

Here, we compared two products on an e-commerce website to get an understanding of Python’s requests and BeautifulSoup modules.

Official documentation of requests module.

Official documentation of BeautifulSoup module.

Challenge 🧗‍♀️

Your challenge is to compare these laptop cases from eBay in terms of the number of units sold:

Links to the products: https://www.ebay.com/itm/353309729543 and https://www.ebay.com/itm/234772625497

Happy solving…

Stay happy 😄, keep coding, and do suggest any improvements.

Take care and have a great 😊 time. I’ll see you soon in the next post… Bye bye 👋