More Code, Less Load

Today, I learned something that completely flipped my perspective on writing code.

In my Data Structures and Algorithms (DSA) class, the first thing we discussed was this:

“Sometimes, 100 lines of code are better than 2.”

At first, that felt strange. Isn’t writing less code a good thing? We’re taught to optimize, to be concise, to find one-liners and clever shortcuts. But in real-world applications, especially the ones we want to scale, this mindset can backfire.

Let me explain — not just with theory, but with a real example from something I’m building.

Real-World Example: My Keyword Research Tool

I’m currently building a keyword research tool — something that helps users discover trending or relevant keywords by crawling sources like Google, Reddit, and various forums.

🧠 The Naïve Way (a.k.a. The 2-Line Mindset)

Every time a user enters a keyword, we could do this:

results = crawl(keyword)

return results

Done! Just two lines.

But here’s why this is a bad idea:

- ❌ Every query triggers a full web crawl — slow and expensive.

- ❌ If 10 users search the same keyword, it repeats the same crawl.

- ❌ Nothing is learned from past queries: the results are thrown away after each request.

- ❌ Users get delayed results every single time.

Short and sweet, yes. But also dumb and inefficient.

The Smarter Way (a.k.a. The 100-Line Mindset)

Instead, here’s what I’m doing now. Yes, it takes more code, but it also makes the system smarter, faster, and more user-friendly.

✅ Step 1: Cache Inputs

When a user searches a keyword:

- Store it in a PostgreSQL database.

- Record a timestamp and keyword metadata.

This way, we start building memory.
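Here is a minimal sketch of what recording a search could look like. To keep it runnable without a database server, it uses Python's built-in `sqlite3` as a stand-in for PostgreSQL. The table name `searches`, its columns, and the helpers `open_store`, `normalize`, and `record_search` are all hypothetical names chosen for illustration, and lowercasing and trimming the keyword is an extra assumption so that "SEO Tips" and "seo tips" count as the same search.

```python
import sqlite3
import time


def open_store(path=":memory:"):
    """Open the store and create the (hypothetical) searches table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS searches (
            keyword      TEXT PRIMARY KEY,
            first_seen   REAL NOT NULL,
            last_seen    REAL NOT NULL,
            search_count INTEGER NOT NULL DEFAULT 0
        )
        """
    )
    return conn


def normalize(keyword):
    """Assumption: lowercase and collapse whitespace so variants share one row."""
    return " ".join(keyword.lower().split())


def record_search(conn, keyword, now=None):
    """Store the keyword with a timestamp and bump its search counter."""
    now = time.time() if now is None else now
    key = normalize(keyword)
    # Create the row on first sight; do nothing if it already exists.
    conn.execute(
        "INSERT OR IGNORE INTO searches "
        "(keyword, first_seen, last_seen, search_count) VALUES (?, ?, ?, 0)",
        (key, now, now),
    )
    # Every search, new or repeated, updates the metadata.
    conn.execute(
        "UPDATE searches SET last_seen = ?, search_count = search_count + 1 "
        "WHERE keyword = ?",
        (now, key),
    )
    conn.commit()
    return key
```

The `search_count` column is the kind of keyword metadata that later makes it possible to tell popular terms from one-off searches.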

✅ Step 2: Check Before Crawling

When a search request comes in:

- First, look it up in the database.

- If data exists and is recent (e.g. < 14 days), return it.

- If not found or too old → then trigger the crawler.
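The check-before-crawl logic above can be sketched roughly like this. It is an illustration under assumptions, not the tool's actual code: an in-memory dictionary stands in for the database, `KeywordCache` and `lookup` are hypothetical names, and the crawler is passed in as a plain function so the example stays self-contained.

```python
import time

# The "< 14 days" freshness window from the text, in seconds.
MAX_AGE_SECONDS = 14 * 24 * 60 * 60


class KeywordCache:
    """Return stored results when they are fresh; crawl only when needed."""

    def __init__(self, crawl, max_age=MAX_AGE_SECONDS, clock=time.time):
        self._crawl = crawl      # function: keyword -> results
        self._max_age = max_age
        self._clock = clock      # injectable so tests can control time
        self._entries = {}       # keyword -> (crawled_at, results)

    def lookup(self, keyword):
        now = self._clock()
        entry = self._entries.get(keyword)
        if entry is not None:
            crawled_at, results = entry
            if now - crawled_at < self._max_age:
                return results   # found and recent: no crawl
        # Not found, or too old: crawl and remember the result.
        results = self._crawl(keyword)
        self._entries[keyword] = (now, results)
        return results
```

With this in place, ten users searching the same keyword within the freshness window cause one crawl instead of ten.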

✅ Step 3: Recrawl Smartly

- Periodically refresh older keywords.

- Prioritize high-traffic or trending terms.

- On launch, pre-crawl popular niches for instant results.
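One possible way to choose what to refresh is sketched below. It assumes each stored keyword carries a crawl timestamp and a search counter, and it treats "high-traffic" simply as "searched most often". The `Entry` class and `pick_recrawl_batch` function are hypothetical, and a real scheduler might weigh trends differently.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Entry:
    keyword: str
    crawled_at: float   # when the keyword was last crawled (seconds)
    search_count: int   # how often users have searched for it


def pick_recrawl_batch(entries, now, max_age, batch_size):
    """Pick up to batch_size stale keywords, most-searched first.

    Ties on search count are broken by age, oldest crawl first.
    """
    stale = [e for e in entries if now - e.crawled_at >= max_age]
    top = heapq.nsmallest(
        batch_size,
        stale,
        key=lambda e: (-e.search_count, e.crawled_at),
    )
    return [e.keyword for e in top]
```

Limiting each run to `batch_size` keywords keeps the background refresh from turning into the "crawl everything all the time" problem it is meant to avoid.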

✅ Step 4: Serve Results Fast

Now:

- Users get near-instant results for previously searched terms.

- The system maintains freshness without crawling everything all the time.

- Server load drops sharply, because repeated searches no longer trigger a new crawl.

More code? Definitely.

But also:

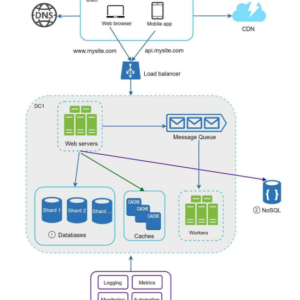

- Smarter architecture.

- Better performance.

- Happier users.

Key Takeaways

- ✨ Less code ≠ better code.

- ✨ Systems that scale need logic, structure, and memory.

- ✨ What looks like “extra effort” often leads to long-term efficiency.

- ✨ Good engineers don’t just write code — they design systems.

Final Thoughts

Learning DSA gave me more than just theory.

It showed me why things like caching, memory usage, and data structures actually matter in real-world projects.

Now, every time I decide whether to go for the “2-line shortcut” or the “100-line system,” I’ll ask myself:

“Do I want to write less code, or do I want to build better tools?”

I’m choosing better tools — even if it takes 100 lines to get there.